Even novice website owners have, at some point, tested their website’s performance. However, most of these tests focus on loading speed or user experience indices.

But what about load testing?

Although most websites see fairly regular traffic levels, some will occasionally have to deal with heavy loads. Examples include online stores and some government websites.

If your website gets an unexpected spike in the number of visitors over a short period, how well are you equipped to handle it?

Understanding Load Testing

What is load testing?

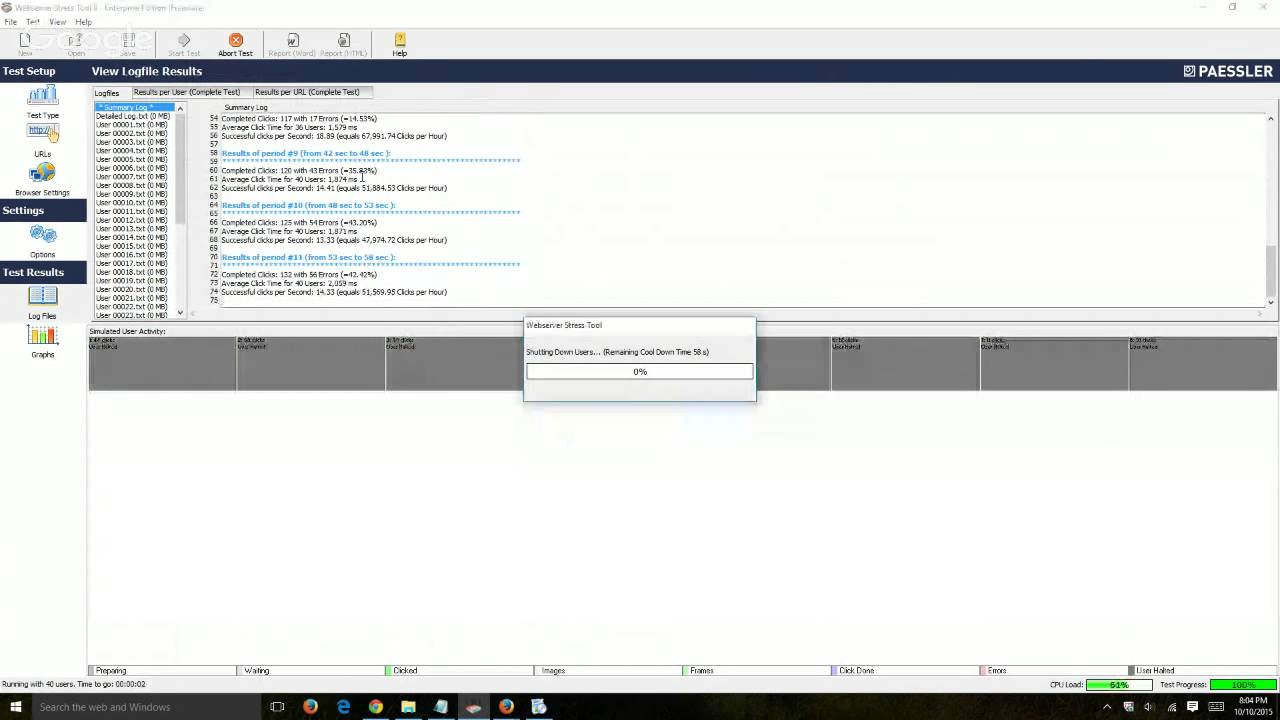

Load testing is benchmarking a website to see how it performs under various loads.

For example, a test may simulate an increasing number of concurrent visitors landing on your site. It will also record how your site handles them so you can review the results later.

What types of “load” are tested?

Different load testing tools come with different features. The most basic will simply simulate an ever-increasing load and halt when your site crashes.

Other tools may be capable of generating a simulated load that mimics different user behaviour, such as performing queries, changing pages, or loading other functions. Some may even be able to map out logical flows for each individual scenario.

Load Testing Tools to Consider

Depending on their complexity, some load testing tools can be quite expensive. However, there are cheaper options in the market and some are even free for use. I’ve included a mixture of these below for your reference, including a couple of open source options.

1. Loadview by Dotcom Monitor

Price: From $199/mo, free trial available

Loadview is one of the more complete solutions on the market and is based on a cloud service model. This means that whatever type of simulation you need, you only pay for the service; there is zero investment in hardware or anything else.

Feature wise, Loadview offers a very complex solution that can include anything from straight up HTTP load tests to a sophisticated mix of your choice. It is able to simulate dynamic variables and even geo-location diversity in its tests.

Features

- Post-firewall tests

- Handles dynamic variables

- Detailed waterfall charts

- Load test curves

2. K6 Cloud (formerly Load Impact)

Price: From $49/mo

K6 is a cloud-based, open source load testing tool that’s provided as a service. One of the things that makes this tool interesting is that it is priced on a variable-use model which means that the cost of entry can be relatively low depending on your needs. It is, however, mainly developer-centric.

Aside from load testing, K6 also offers performance monitoring. Its load testing side is focused on high loads and can handle various modes such as spikes, stress testing, and endurance runs.

*K6 does not run in browsers nor does it run in NodeJS

Features

- Developer-friendly APIs.

- Scripting in JavaScript

- Performance monitoring

3. Load Ninja

Price: From $270.73/mo

Load Ninja lets you load-test with real browsers based on recorded scripts and then helps analyze performance results. Its use of real browsers at scale means that this tool helps recreate a more realistic environment and end result for testing.

Results can be analyzed in real time and, thanks to the handy tools the system provides, your scripting time can be reduced by as much as 60%. Internal applications can be tested as well, either with proxy-based fixed IPs or with your own range of dynamic IPs (by using a whitelister).

Features

- Test with thousands of real browsers

- Diagnose tests in real-time

- Insights on internal application performance

4. LoadRunner by Micro Focus

Price: From $0

With an entry-level free community account that supports tests with 50 virtual users, LoadRunner is accessible even to the newest website owners. However, the cost rises steeply as you scale up.

This cloud-based service also offers an Integrated Development Environment for unit tests. It supports a wide range of application environments including Web, Mobile, WebSockets, Citrix, Java, .NET, and much more. Be aware that LoadRunner can be pretty complex and has a steep learning curve.

Features

- Patented auto-correlation engine

- Supports 50+ technologies and application environments

- Reproduces real business processes with scripts

5. Loader

Price: From $0

Compared to what we’ve shown so far, Loader is a much simpler and more basic tool. Its free plan supports load testing with up to 10,000 virtual users which is enough for most moderate traffic websites.

Unfortunately you will need to have a paid plan to access more advanced features such as advanced analytics, concurrent tests, and priority support. It is easy to use though since basically you just add your site, specify the parameters, then let the test run.

Features

- Shareable graphs & stats

- Useable in a GUI or API format

- Supports DNS Verification and priority loaders

6. Gatling

Price: From $0

Gatling comes in two flavors, Open Source or Enterprise. The former lets you load-test as an integration with your own development pipeline. It includes both a web recorder and report generator with the plan. The Enterprise version has on-premise deployments or alternatively, you can opt for a Cloud version based on Amazon Web Services (AWS).

Although both of these versions are feature-packed, the Enterprise version supports a few extras that don’t come with Open Source. For example, it has a more usable management interface and supports a wider range of integrations.

Features

- Multi-protocol scripting

- Unlimited testing and throughput

- Gatling scripting DSL

7. The Grinder

What to Check for When Load Testing?

As the name implies, your core focus should be how your site performs under load. This will let you observe a number of things such as:

- At what point your site performance starts to degrade

- What actually happens when service degrades

When I mentioned how different sites may react differently based on their architecture, that was a signal meant for you to understand that not all sites fail in the same way as well. Some database-intensive sites might fail on that point, while others may suffer IO failures based on server connection loads.

Because of this, you need to be prepared to set up a variety of tests to understand how your site and server will cope under various scenarios. Based on those, keep a close eye on a few key metrics such as your server response time, the number of errors cropping up, and what areas those faults may lie in.
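To make those key metrics concrete, here is a minimal sketch of how the raw results of one test run might be summarized. It assumes each result is recorded as an object with a response time in milliseconds and a success flag; the `summarizeRun` name and the sample shape are illustrative, not taken from any of the tools above.

```javascript
// Summarize the raw samples from one load-test run.
// Assumed sample shape: { ms: <response time in ms>, ok: <true if no error> }.
function summarizeRun(samples) {
  if (samples.length === 0) {
    return { count: 0, errorRate: 0, meanMs: 0, p95Ms: 0 };
  }
  // Sort response times ascending so a percentile can be read off by rank.
  const times = samples.map((s) => s.ms).sort((a, b) => a - b);
  const errors = samples.filter((s) => !s.ok).length;
  const meanMs = times.reduce((sum, t) => sum + t, 0) / times.length;
  // Nearest-rank 95th percentile.
  const p95Index = Math.max(0, Math.ceil(times.length * 0.95) - 1);
  return {
    count: samples.length,
    errorRate: errors / samples.length,
    meanMs,
    p95Ms: times[p95Index],
  };
}
```

Comparing these summaries across runs at different load levels is one way to see where response times or error rates start to climb.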

Generating complex scripts and runs along with the accompanying logic can be difficult. I suggest that you approach load testing incrementally. Start with a brute force test that will simply test your site under a continuously increasing stream of traffic.

As you gain experience, add on other elements such as variable behaviour, developing your scripts and logic over time.
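The incremental approach can be sketched as a simple ramp plan: a list of stages, each raising the number of simulated users by a fixed step. This is only an illustration; `buildRampPlan` and its parameters are hypothetical names, and most of the tools above let you express the same idea in their own configuration.

```javascript
// Build a step-wise ramp: start at startUsers, add stepUsers every
// stepSeconds, and stop once maxUsers would be exceeded.
function buildRampPlan(startUsers, stepUsers, maxUsers, stepSeconds) {
  if (stepUsers <= 0) {
    throw new RangeError('stepUsers must be greater than zero');
  }
  const plan = [];
  for (let users = startUsers; users <= maxUsers; users += stepUsers) {
    // Each stage begins one step interval after the previous one.
    plan.push({ atSecond: plan.length * stepSeconds, users });
  }
  return plan;
}
```

For example, `buildRampPlan(10, 10, 50, 30)` describes five stages, from 10 users at the start to 50 users two minutes in.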

Conclusion: Some is Better than None

When it comes to load testing, starting with the basics is better than not getting started at all. If you’re a beginner to all of this, do try to do your testing on an alternate mirror or offline where possible – avoid load testing a live site if you can!

If you’re just starting out now, make sure to create a record of your tests. Performance testing is a journey that should accompany the development of your site as it grows. The process can be tiring but remember, not having a record can make future assessments much more difficult for you.

This post is the second in a three-part series describing our investigations into scalability for a second screen application we built for PBS. You can read the introduction here. This guide assumes you have a production server up and running. If you need help getting there, check out the final post in the series for a guide to configuring a Node.js server for production.

If you read my harrowing tale on stress testing, you may be interested in conducting similar research for your own nefarious purposes. This post is intended to help others get a leg up stress testing Node.js applications, particularly those involving Socket.io.

I’ll cover what I consider to be the three major steps to getting up-and-running:

- Building a client simulator

- Distributing the simulation with Amazon EC2

- Controlling the simulation remotely

The Client Simulator

The first step is building a simulation command-line interface. I’ll necessarily need to be a little hand-wavy here because there are so many ways you may have structured your app. My assumption is that you have some JavaScript file that fully defines a Connection constructor and exposes it to the global scope.

Simulating many clients in Node.js. Lucky for you, Socket.io separates its client-side logic into a separate module, and this can run independently in Node.js. This means that you are one ugly hack away from using the network logic you’ve already written for the browser in Node.js (assuming your code is nice and modular):
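The original listing is not reproduced here, so what follows is only a sketch of one way such a hack could look: put the client library on Node’s global object under the name the browser code expects, then load the browser module. The helper name `loadBrowserModule` and the file name `./connection.js` are hypothetical.

```javascript
// Expose a library under a global name (as a <script> tag would in the
// browser), then run the loader for the module that depends on it.
function loadBrowserModule(globalName, library, loadModule) {
  global[globalName] = library;
  return loadModule();
}

// Intended use, assuming the socket.io-client package is installed and that
// the hypothetical ./connection.js assigns Connection to the global scope:
//
//   loadBrowserModule('io', require('socket.io-client'),
//     () => require('./connection.js'));
//   const connection = new Connection();
```

Wrapping the two steps in a function keeps the global assignment in one visible place, which makes the hack easier to remove later.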

Now you can instantiate an arbitrary number of connections iteratively. Be sure to spread these initializations out over time, like so:
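A minimal sketch of that staggering, assuming a `clientCount` value and a callback that creates one connection (for instance, `() => new Connection()`). The function names and the even spacing are illustrative choices, not the original listing.

```javascript
// Compute an evenly spaced start delay (in ms) for each simulated client,
// so that clientCount connections are spread across spreadMs.
function connectionDelays(clientCount, spreadMs) {
  const delays = [];
  for (let i = 0; i < clientCount; i++) {
    delays.push(Math.round((i * spreadMs) / clientCount));
  }
  return delays;
}

// Schedule one connection per delay. createConnection receives the client
// index; the returned timers can be passed to clearTimeout to abort.
function launchClients(clientCount, spreadMs, createConnection) {
  return connectionDelays(clientCount, spreadMs).map((delay, index) =>
    setTimeout(() => createConnection(index), delay)
  );
}
```

With these helpers, `launchClients(clientCount, 60000, () => new Connection())` would open the connections over the course of a minute rather than all at once.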

As I discussed in the previous post in this series, failing to take this precaution will result in heartbeat synchronization. I guess that sounds cool, but it can have unpredictable results on your measurements.

If your connection module is using Socket.io’s top-level io.connect method to initiate a connection, you’ll have to make an additional modification. By default, Socket.io will prevent the simulator from making multiple connections to the same server. You can disable this behavior with the 'force new connection' flag, as in io.connect({ 'force new connection': true });.

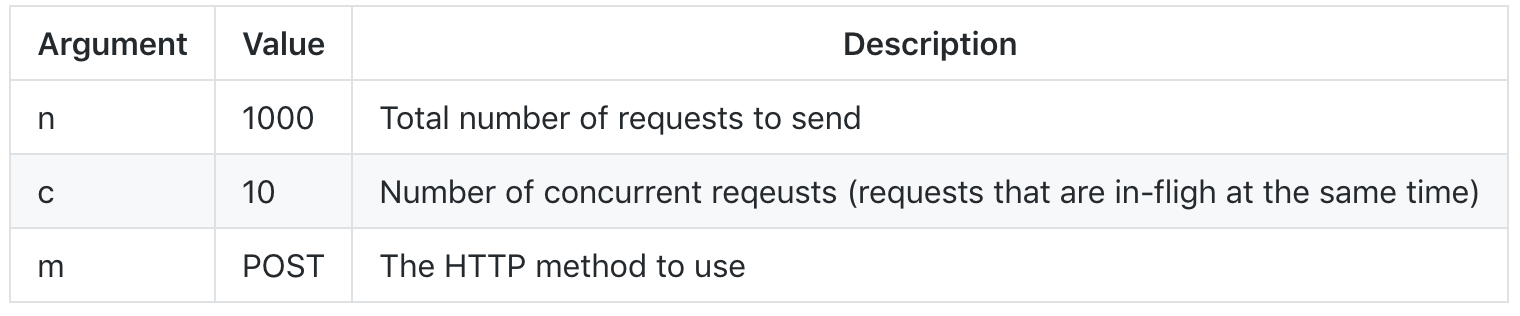

The above example makes use of a clientCount variable. This is just one simulation variable you will likely want to manipulate at run time. It’s a good idea to support command-line flags for changing these values on the fly. I recommend using the “optimist” library to help build a usable command-line interface.
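To show the idea without depending on any package, here is a small hand-rolled flag parser. It is a stand-in for illustration only, not optimist’s API; the `parseFlags` name and the flag names in the usage note are hypothetical.

```javascript
// Parse "--name value" and "--name=value" flags into an options object.
// Only names present in defaults are accepted; numeric defaults coerce
// the supplied value to a number.
function parseFlags(argv, defaults) {
  const options = { ...defaults };
  for (let i = 0; i < argv.length; i++) {
    const match = /^--([^=]+)(?:=(.*))?$/.exec(argv[i]);
    if (!match || !(match[1] in defaults)) continue;
    const name = match[1];
    // Use the inline value if present, otherwise consume the next argument.
    const raw = match[2] !== undefined ? match[2] : argv[++i];
    if (raw === undefined) continue; // flag given without a value
    options[name] = typeof defaults[name] === 'number' ? Number(raw) : raw;
  }
  return options;
}
```

In a simulator script this might be called as `parseFlags(process.argv.slice(2), { clientCount: 100, spreadMs: 5000 })`, so that `--clientCount 500` overrides the default at run time.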

Data collection. For another perspective on test results, you may decide to script your simulator to collect performance data. If so, take care in the method of data collection and reporting you choose. Any extraneous computation could potentially affect the simulator’s ability to simulate clients, and this will lead to unrealistic artifacts in your test results.

For example, you may want to store the time that each “client” receives a given message. A naive approach would look something like this:
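A sketch of what such a naive approach might look like, assuming one log line per received message. The function name, the log file argument, and the line format are illustrative.

```javascript
const fs = require('fs');

// Naive: block the event loop with a synchronous disk write every time
// any simulated client receives a message.
function recordMessageSync(logFile, clientId, receivedAt = Date.now()) {
  fs.appendFileSync(logFile, `${clientId},${receivedAt}\n`);
}
```

Because every call waits for the write to finish, hundreds of simulated clients sharing one process would end up queuing behind the disk.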

Most Node.js developers maintain a healthy fear of any function call that starts with fs. and ends with Sync, but even the asynchronous version could conceivably impact your simulator’s ability to realistically model hundreds of clients:
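The asynchronous variant might be sketched as follows, under the same assumptions (illustrative names, one line per message). The optional `done` callback is an addition for demonstration.

```javascript
const fs = require('fs');

// Asynchronous: the event loop is not blocked, but every message still
// queues its own disk write behind the scenes.
function recordMessageAsync(logFile, clientId, receivedAt = Date.now(), done = () => {}) {
  fs.appendFile(logFile, `${clientId},${receivedAt}\n`, (err) => {
    if (err) console.error('failed to record message:', err.message);
    done(err);
  });
}
```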

To be safe, I recommend storing test statistics in memory and limiting the number of disk writes. For instance:
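A minimal sketch of the in-memory approach, assuming a single shared array is enough for the amount of data you collect. The `stats` and `recordMessage` names are illustrative.

```javascript
// All measurements live in this array until something asks for them.
const stats = [];

// Recording a message is now just an array push: no I/O on the hot path.
function recordMessage(clientId, receivedAt = Date.now()) {
  stats.push({ clientId, receivedAt });
}
```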

Now, you could have the simulator periodically write the data to disk and flush its in-memory store:
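One way this periodic flush might be sketched, assuming entries shaped like `{ clientId, receivedAt }` held in an array. The function names, the log format, and the interval are illustrative.

```javascript
const fs = require('fs');

// Write everything collected so far in a single append, then forget it.
// Returns the number of entries handed to the file system.
function flushStats(stats, logFile, done = () => {}) {
  if (stats.length === 0) {
    done(null);
    return 0;
  }
  // splice(0) empties the array in place and returns the removed entries.
  const batch = stats.splice(0);
  const lines = batch.map((e) => `${e.clientId},${e.receivedAt}\n`).join('');
  fs.appendFile(logFile, lines, done);
  return batch.length;
}

// Flush on a fixed interval; the timer is unref'ed so that it alone does
// not keep the process running.
function startPeriodicFlush(stats, logFile, intervalMs) {
  const timer = setInterval(() => flushStats(stats, logFile), intervalMs);
  timer.unref();
  return timer;
}
```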

…but you still run the risk of simulation artifacts from conducting I/O operations in the middle of the procedure. Instead, consider extending your simulator to run a simple web server that responds to HTTP GET requests by “dumping” the data it has collected:
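A sketch of such a reporting server using Node’s built-in http module, assuming the collected entries live in an array and that JSON is an acceptable dump format. The function names and the port in the comment are hypothetical.

```javascript
const http = require('http');

// Answer GET requests with the collected entries as JSON; reject anything else.
function handleStatsRequest(stats, req, res) {
  if (req.method !== 'GET') {
    res.writeHead(405, { Allow: 'GET' });
    res.end();
    return;
  }
  res.writeHead(200, { 'Content-Type': 'application/json' });
  res.end(JSON.stringify(stats));
}

// Build the server without starting it, so the caller picks the port.
function createStatsServer(stats) {
  return http.createServer((req, res) => handleStatsRequest(stats, req, res));
}

// Example (port 8081 is an arbitrary choice):
//   createStatsServer(stats).listen(8081);
```

Serializing on demand means the cost of reporting is paid only when you ask for the data, ideally while the simulation is paused.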

This approach allows for a clean separation of data gathering and data reporting. You can run your simulation for some period of time, pause it, and then run the following command to get at your statistics:

Of course, this also introduces the overhead of running a web server. You will have to weigh this against the performance impact of immediate I/O operations, and this is largely dependent on the amount of data you intend to collect (both in terms of size and frequency).

Scripting clients. In some cases, having “dumb” clients that merely connect and wait for data will be enough to test the system. Many applications include complex client-to-client communication, so you may need to script more nuanced behavior into the simulator. That logic is extremely application-specific which means I get to “leave it as an exercise to the reader” to build a script that makes sense for your application.

Although you now have a mechanism to simulate a large number of clients on your local machine, this approach would be far too unrealistic. Any tests you run will likely be bottlenecked by your system’s limitations, whether in terms of CPU, memory, or network throughput. No, you’ll need to simulate clients across many physical computers.

Distributing with EC2

Taking a hint from Caustik’s work, I began researching details on using Amazon Web Services’ Elastic Compute Cloud (EC2) to distribute my new simulation program. As it turns out, Amazon offers a free usage tier for new users. The program lasts for a year after initial sign up and includes (among other things) 750 hours per month on an EC2 “Micro” instance. This is enough to run one instance all day, every day, or (more importantly) 20 instances for one hour a day. All this means that you can run your simulation on 20 machines for free!
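The arithmetic behind that claim can be checked in a few lines. This sketch assumes the 750 hours are a monthly allowance and uses a 31-day month as the worst case; the names are illustrative.

```javascript
// Free-tier allowance, assumed here to be instance-hours per month.
const FREE_TIER_HOURS = 750;

// Total instance-hours consumed by running `instances` machines for
// `hoursPerDay` hours on each of `days` days.
function instanceHours(instances, hoursPerDay, days) {
  return instances * hoursPerDay * days;
}

const alwaysOn = instanceHours(1, 24, 31);   // one instance, around the clock
const dailySwarm = instanceHours(20, 1, 31); // twenty instances, one hour a day

console.log(alwaysOn <= FREE_TIER_HOURS, dailySwarm <= FREE_TIER_HOURS);
```

Both schedules fit under the allowance (744 and 620 instance-hours respectively), though running both in the same month would not.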

The first thing you’ll need is an Amazon Machine Image (or “AMI” for short). This is a disk image from which each machine will boot. Amazon hosts an entire ecosystem of user-made AMIs, and you can likely find one that suits your needs. I recommend creating your own since it is so easy and you can never be sure exactly what might be running on a home-made AMI. Just select a free AMI authored by Amazon to begin. We’ll be using this as a base to create our own custom AMI to be cloned as many times as we wish.

You can use many of the AMIs offered by Amazon at no charge under the AWS free tier.

As part of this process, you will need to create a key pair for starting this AMI. Hold on to this: you’ll need it to launch multiple instances in the next step.

By default, AMIs do not support remote connections through SSH. This is the underlying mechanism of our administration method (more on this below), so you’ll need to enable it. Use the AWS Management Console to create a custom security group that allows connections on port 22 (to make this easy, the interface has a pre-defined rule for SSH).

In this example, the security group named “bees” has been configured to support SSH access.

Next, you’ll want to customize the AMI so that it can run your simulation immediately after being cloned. You can install Node.js from your operating system’s software repository, but please note that the version available may be well behind latest. This has historically been the case with Debian and Ubuntu, for instance, which currently supply version 0.6.19 despite the latest stable release being tagged at 0.8.16. Installing Node.js from source may be your best bet (if you need guidance on that, check out this page on the Node.js wiki).

While you could use a utility like scp to copy your simulation code to the AMI, I recommend installing your version control software (you are using a VCS, right?) and fetching from your repository. This will make it much easier to update the simulation later on.

Currently, your simulation script is likely tying up the terminal. When connected over SSH, this behavior would prevent you from issuing any other commands while the simulation runs. There are a number of ways you can “background” a process, thus freeing up the command line. Since you have already installed Node.js, I recommend simply using the “forever” utility from Nodejitsu. Install it globally with:

Now, when connected remotely, you can effectively “background” the following command:

by instead running:

When you’re done, just run:

to cancel the simulator.

Now that you’re able to run the simulation on this customized setup, it’s time to save it as your own personal AMI. With your EC2 instance running, visit the EC2 Dashboard and view the running instances. Select the instance you’ve been working with and hit “Create Image”. In a few minutes, your custom AMI will be listed in the dashboard, along with a unique ID. You’ll need to specify this ID when starting all your simulator instances, so make note of it.

Administration

The last step is building a means to administer all those instances remotely. You’ll want to make running a test as simple as possible, and it can certainly be easier than manually SSH’ing into 20 different computers.

There’s an old saying that goes, “There’s nothing new under the sun.” Besides sounding folksy and whimsical, it has particular relevance in the open-source world: it means that someone else has already done your job for you.

In this case, “someone else” is the fine folks over at the Chicago Tribune. Back in 2010, they released a tool named “Bees with Machine Guns”. This was designed to administer multiple EC2 instances remotely. There’s one catch, though: it was built specifically for the Apache Benchmark tool.

In order to use it to control your Node.js client simulator, you’ll need a more generalized tool. I made a quick-and-dirty fork of the project to allow for running arbitrary shell commands on all the active EC2 instances (or in beeswithmachineguns lingo, “all the bees in the swarm”). I’m no Pythonista, though, and I encourage you to fork it and help me clean it up!

There are a number of flags you will need to specify in order to get your bees off the ground. These include the key pair for your custom AMI and the name of the custom security group you created. Type bees --help for details.

Here’s an example of how you might start up 10 bees:

Bear in mind that you are on the clock for as long as these instances are running, regardless of whether or not you are actually conducting your tests. Even with the free usage tier, you run the risk of being billed for long-running instances. Once you are finished, do not forget to deactivate the EC2 instances!

To play it safe, I recommend keeping the EC2 Management Console open during this procedure. This lets you keep an eye on all currently-running instances and can help assure you that yes, they really are all off.

You can invoke your client simulator using the exec command along with the forever utility we built in to the AMI:

And if you ever want to change your simulator logic on the fly, you can use the same API to pull the latest code:

Just remember that the next time you run your tests, you will be re-initializing machines from your custom AMI, so these on-the-fly patches won’t stick.

With Great Power…

Summing up, you now have a complete stress-testing framework ready to go:

- A command-line tool for simulating any number of clients

- A group of computers that will run this tool simultaneously

- A method to control those computers from the comfort of your swivel chair/throne/park bench

Now (finally) you’re ready to begin your stress test in earnest. If all this is new to you, you might not have a production server to test. Sure, you could test your development machine, but we’re striving for realism here. Not to worry: in the final part of this series, I detail my approach to creating a production-ready Node.js server.